-

For example, both Microsoft and Apple are hiring the largest numbers of people into teams that develop systems to dig through your files in their clouds (the nice term for this is text- and vision-based AI). These systems analyze your stuff, figure out what is risky for the company where you work or for the ruling class in general, and report all real progress to your boss (companies will get a special rate to buy info about you). They will also check all video and photo files; all names of people (via faces) and all content will be collected indefinitely, reported and sold.

Another thing I hear is that both companies already have AI that can check all major file formats and reject any encrypted files or archives. The idea is to finally reach the bureaucratic dream land, where files are encrypted but they know the key (both Microsoft and Apple clouds already work this way; both lack any private encryption).

Both clouds also have very interesting new strategies. For instance, I already heard that a bank insisted that a medium-sized US company (around 150 people) move into a specific cloud in order to get a new round of credit."

@Vitaliy_Kiselev those are all valid societal concerns, I suppose, with regards to surveillance taking over our lives and tech monopolies and what-not. The fact remains, however that this is a nice step for blazing fast and intuitive non-linear-editing of video in extremely hi-res formats for $1,000 or less, which seems like a step in the right direction from a filmmaking perspective.

A kid with an iPhone and a MacBook is Coppola's proverbial 'fat kid in ohio' example, now, and in theory great things can come of that. I suppose in practice, it'll be like the DSLR revolution, a few folks made features that might not have otherwise, mostly no one did shit except YouTube camera tests. Plus we can throw in your dystopian tech company controlled world to boot.

Still, though, haha...they're damn fast and cheap for Apple, and FCPX is life changing in ways people still don't get.

-

The fact remains, however that this is a nice step for blazing fast and intuitive non-linear-editing of video in extremely hi-res formats for $1,000 or less

Presstitutes and mass media have already told you that around a thousand times, haven't they?

The things I am talking about are the most important; a small performance gain is not.

And I have no idea why I would want to edit even 4K video on a small laptop unless I am in a hotel on some distant trip (and COVID has mostly taken care of that option).

-

Talked to an engineer who worked in Taiwan, including on the design of older MB motherboards

He said that Apple made its biggest and most staggering error. Tactically it can look like a small win, but in the long term Apple will be required to keep two fully separate lineups: notebooks, where progress will stop fast just due to issues with smaller processes, and desktops, where Apple will need fully parallel x86 software.

He is afraid that the present Apple managers want to abandon the whole pro desktop market, as Apple has absolutely no ability to make and update multiple top desktop-class CPUs (they are very costly to make and Apple holds only a tiny niche).

On the other hand, we know that Apple has a quite big team of engineers and developers working on mobile Ryzen-based notebooks and desktops. We could see notebooks with 16-core H-class CPUs, from 45 to 90 watts, as soon as next year.

-

@radikalfilm Not to hijack this thread but take a look at this project:

https://www.cubbit.io/one-time-payment-cloud-storage

https://www.hackster.io/news/take-advantage-of-distributed-cloud-storage-with-cubbit-357b4498ade4

-

This is what all the talk is about

Apple Inc. is planning a series of new Mac processors for introduction as early as 2021 that are aimed at outperforming Intel Corp.’s fastest.

Chip engineers at the Cupertino, California-based technology giant are working on several successors to the M1 custom chip, Apple’s first Mac main processor that debuted in November. If they live up to expectations, they will significantly outpace the performance of the latest machines running Intel chips, according to people familiar with the matter who asked not to be named because the plans aren’t yet public.

Apple’s M1 chip was unveiled in a new entry-level MacBook Pro laptop, a refreshed Mac mini desktop and across the MacBook Air range. The company’s next series of chips, planned for release as early as the spring and later in the fall, are destined to be placed across upgraded versions of the MacBook Pro, both entry-level and high-end iMac desktops, and later a new Mac Pro workstation.

The road map indicates Apple’s confidence that it can differentiate its products on the strength of its own engineering and is taking decisive steps to design Intel components out of its devices. The next two lines of Apple chips are also planned to be more ambitious than some industry watchers expected for next year. The company said it expects to finish the transition away from Intel and to its own silicon in 2022.

The current M1 chip inherits a mobile-centric design built around four high-performance processing cores to accelerate tasks like video editing and four power-saving cores that can handle less intensive jobs like web browsing. For its next generation chip targeting MacBook Pro and iMac models, Apple is working on designs with as many as 16 power cores and four efficiency cores.

Apple could choose to first release variations with only eight or 12 of the high-performance cores enabled depending on production. Chipmakers are often forced to offer some models with lower specifications than they originally intended because of problems that emerge during fabrication.

For higher-end desktop computers, planned for later in 2021 and a new half-sized Mac Pro planned to launch by 2022, Apple is testing a chip design with as many as 32 high-performance cores.

Apple engineers are also developing more ambitious graphics processors. Today’s M1 processors are offered with a custom Apple graphics engine that comes in either 7- or 8-core variations. For its future high-end laptops and mid-range desktops, Apple is testing 16-core and 32-core graphics parts.

For later in 2021 or potentially 2022, Apple is working on pricier graphics upgrades with 64 and 128 dedicated cores aimed at its highest-end machines, the people said. Those graphics chips would be several times faster than the current graphics modules Apple uses from Nvidia and AMD in its Intel-powered hardware.

Interesting Bloomberg article, mostly written by the Apple PR team; they have done this regularly over the last 2 years.

Apple feels bad vibes from big corporations and clients, and they need to quiet this down while their teams urgently work on a solution.

But here comes the hefty price of the approach Apple is now taking: any small error or issue at TSMC and it will be a disaster.

Even this year, if not for the extreme attack on Huawei, Apple would not have been able to get any volume of M1 chips. In reality, 100% of M1 production lines and 35% of iPhone 12 chip lines are lines that Huawei paid for and reserved at TSMC.

-

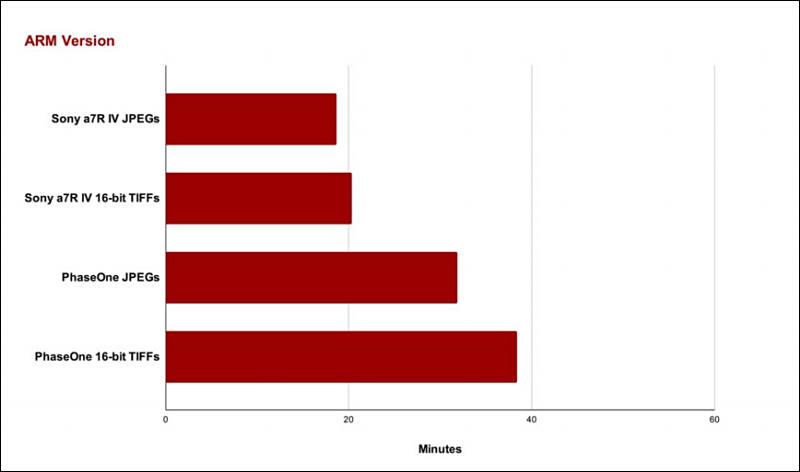

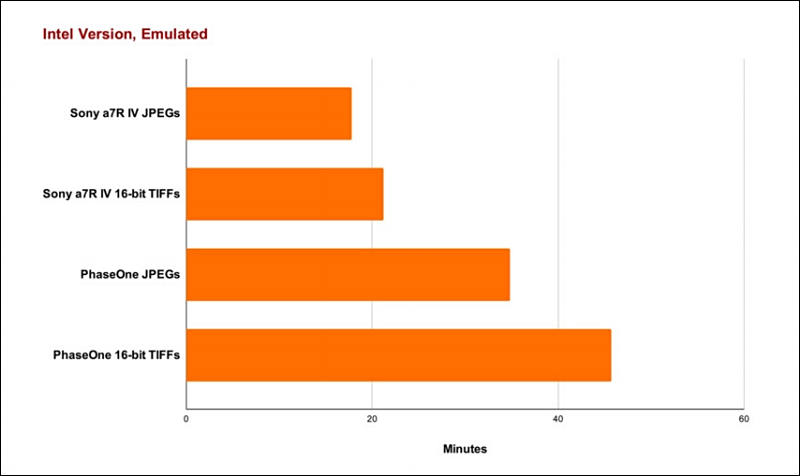

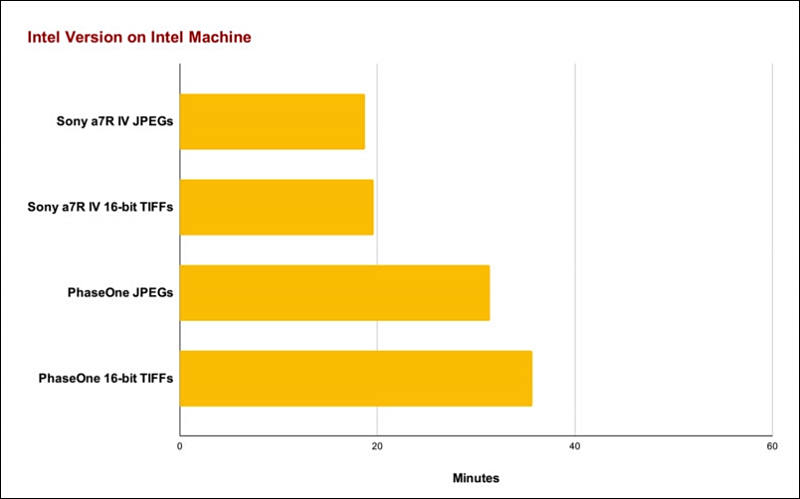

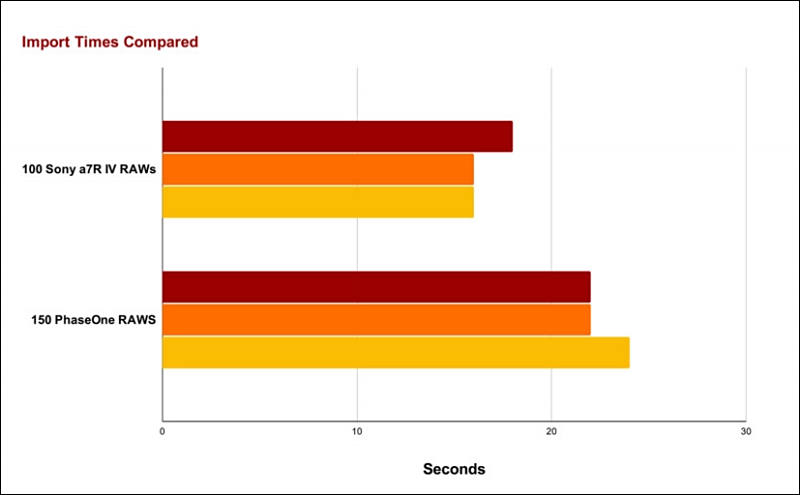

More down to earth results - Adobe Lightroom

https://petapixel.com/2020/12/08/benchmarking-performance-lightroom-on-m1-vs-rosetta-2-vs-intel/

This is interesting, as it is a sample of an app that does not use fake GPU help during CPU operations (which adds a lot of "performance" in other benchmarks) and does not use the newer video import/export engines. And the results are not good: it seems the M1 CPU by itself, without big help from manual optimization, does not add a lot, contrary to Apple's claims.

-

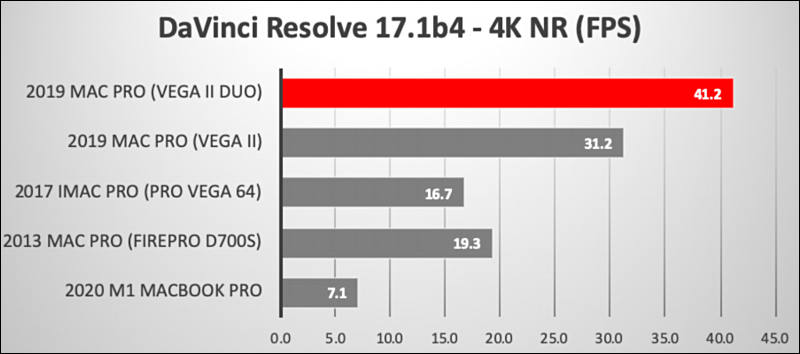

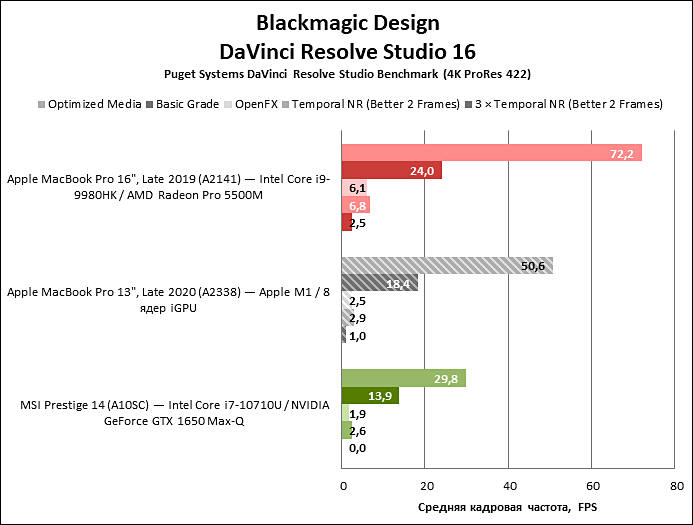

A test on DaVinci Resolve. I would like to see a test with a MacBook Pro with an eGPU.

https://barefeats.com/m1-macbook-pro-vs-other-macs-davinci.html

-

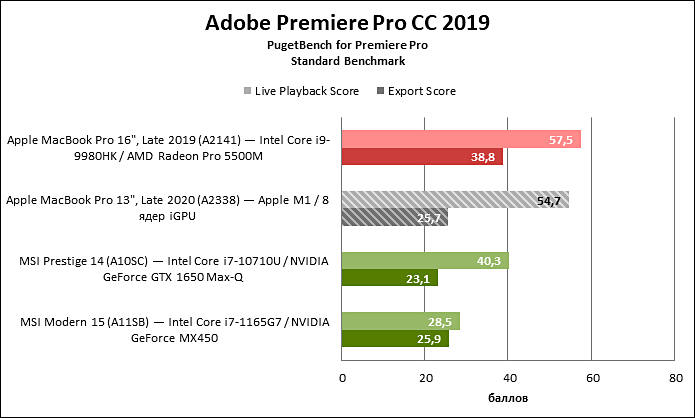

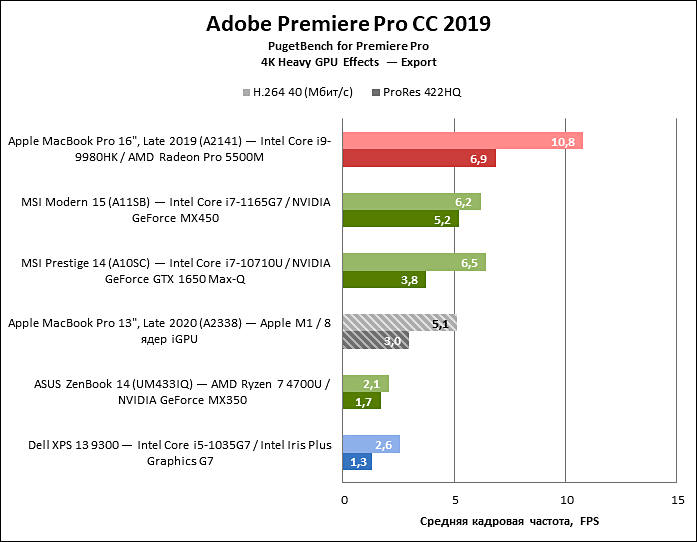

The Resolve test is really bad, as it has no relation to real projects and work.

The Puget guys do even worse things: if they want to prove that you need 2 GPUs and a 32-core CPU, they just stack 20 abnormally selected nodes, like 5 blurs and 3 noise reductions one after another, and test it all on the least compressed Sony 8K raw files (to make it much less optimized than RED). All this while the project lacks any cuts, normal real-life color grading and so on.

Because if they made it a real-life project in 4K or 1080p, with the very few nodes of normal life, more tracks, mostly simple cuts and H.264 or HEVC export, it would be bad for selling top-end stuff.

-

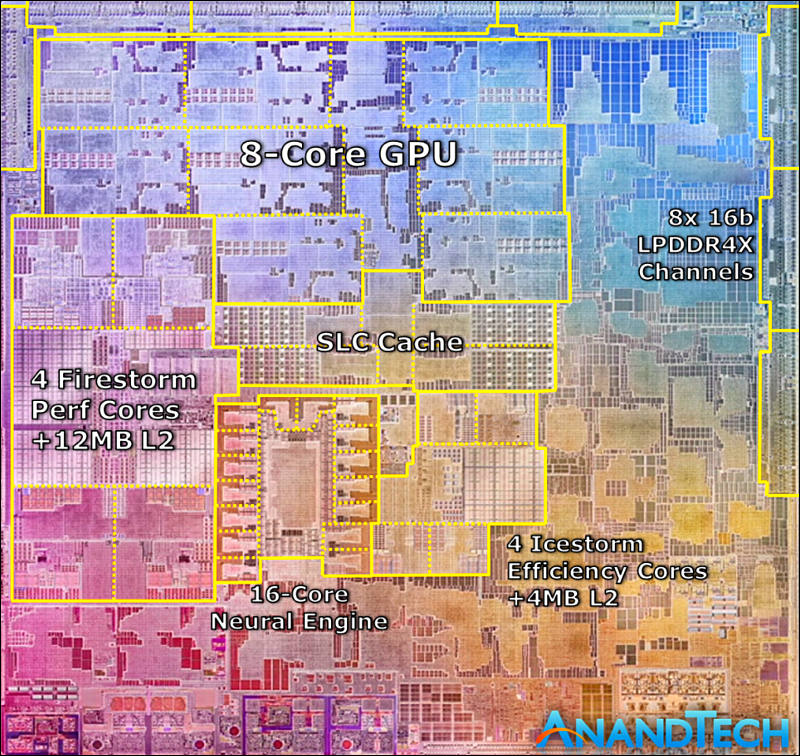

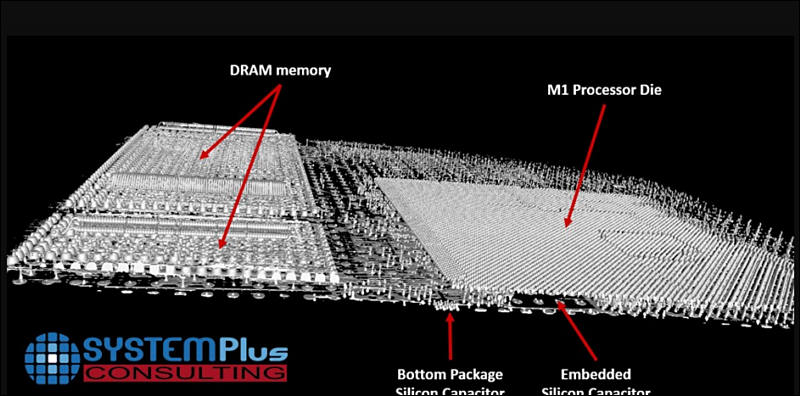

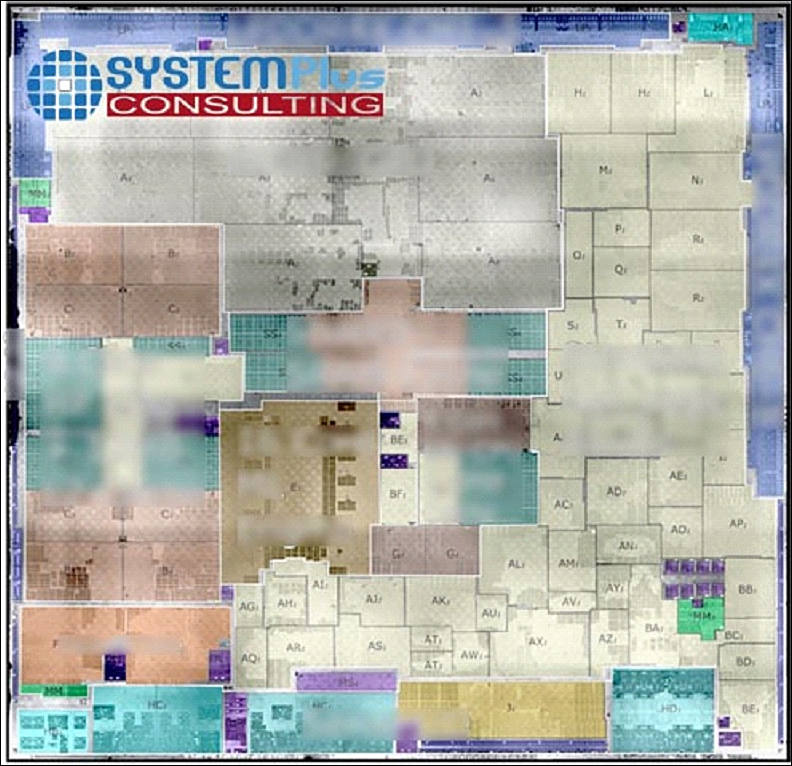

M1 CPU structure and M1 RAM

Note that the M1 CPU not only uses much faster RAM chips, but also has a double-width bus.

So compared to the dual-channel DDR4L-2400 of a typical Windows notebook, this one has 3.5x more throughput.

That is a very big difference.
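The 3.5x figure can be reproduced with back-of-envelope arithmetic. The sketch below is only an illustration: it assumes LPDDR4X-4266 on the M1 side and DDR4-2400 on the notebook side, and the bus widths are parameters I picked to match the "double width" premise of the post, not confirmed figures. Function and constant names are hypothetical.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    return transfer_rate_mts * 1e6 * (bus_width_bits / 8) / 1e9


# Assumed memory types (not stated in the post): LPDDR4X-4266 vs DDR4-2400.
M1_RATE_MTS = 4266
DDR4_RATE_MTS = 2400


def bandwidth_ratio(m1_bus_bits: int, ddr4_bus_bits: int) -> float:
    """Ratio of M1 peak bandwidth to the DDR4 notebook's peak bandwidth."""
    m1 = peak_bandwidth_gbs(M1_RATE_MTS, m1_bus_bits)
    ddr4 = peak_bandwidth_gbs(DDR4_RATE_MTS, ddr4_bus_bits)
    return m1 / ddr4


# Premise of the post: the M1 bus is twice as wide as the notebook's.
ratio_double_width = bandwidth_ratio(128, 64)

# Alternative assumption: both sides total 128 bits (two 64-bit DDR4 channels),
# so only the data-rate difference remains.
ratio_same_width = bandwidth_ratio(128, 128)

print(f"double-width premise: {ratio_double_width:.2f}x")
print(f"equal-width assumption: {ratio_same_width:.2f}x")
```

Under the double-width premise the ratio comes out at roughly 3.5x; if the notebook's two channels add up to the same total width, only the data-rate gap of about 1.8x is left, so the result depends heavily on which bus widths are being compared.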

-

Rumors from TSMC

Apple may be getting 100% of TSMC-made 3nm chips for up to 12 months. No other company will have any significant share (except test batches and very custom low-volume chips).

For Apple, 3nm is now absolutely required.

-

Small fun thing from inside Apple, from a dev

One of the marketing guys proudly said (in a small private Zoom conference) that they managed to force all their related bloggers and sites to write about the M1 only as an 8-core CPU (in the first 1-2 mentions and in important places), not as a 4 + 4 weak cores CPU.

This is one of eight important points that they check before any publication. Breaking these points and insisting on not following them means that Apple stops including you in the privileged list of those who get early hardware and access to info; a few top sites could also lose Apple marketing budgets.

One other point of the eight that I know of concerns x86 compatibility: a publication must never mention any serious issues, must not cover any of the problems with running full x86 Windows, and must be very fuzzy here.

-

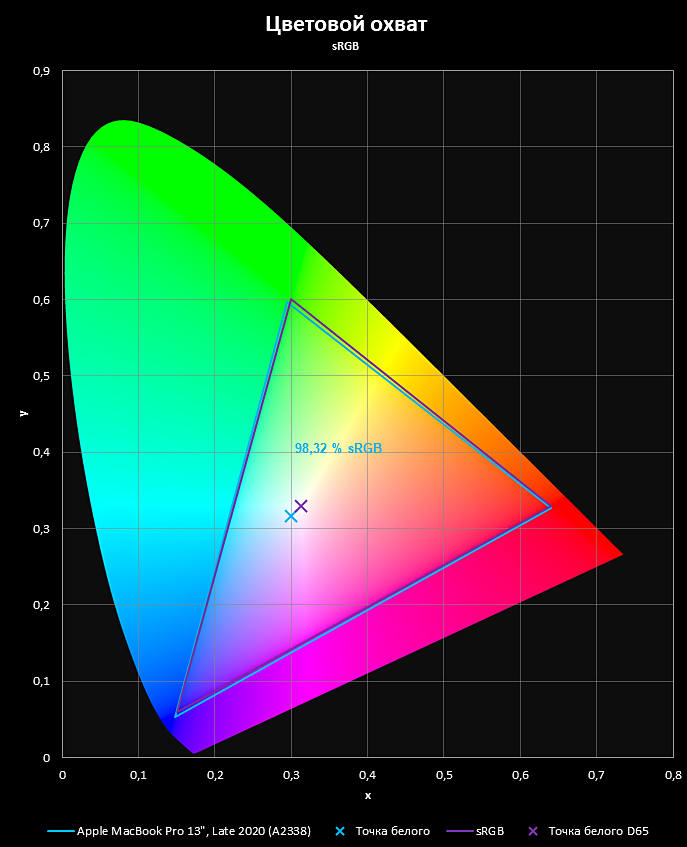

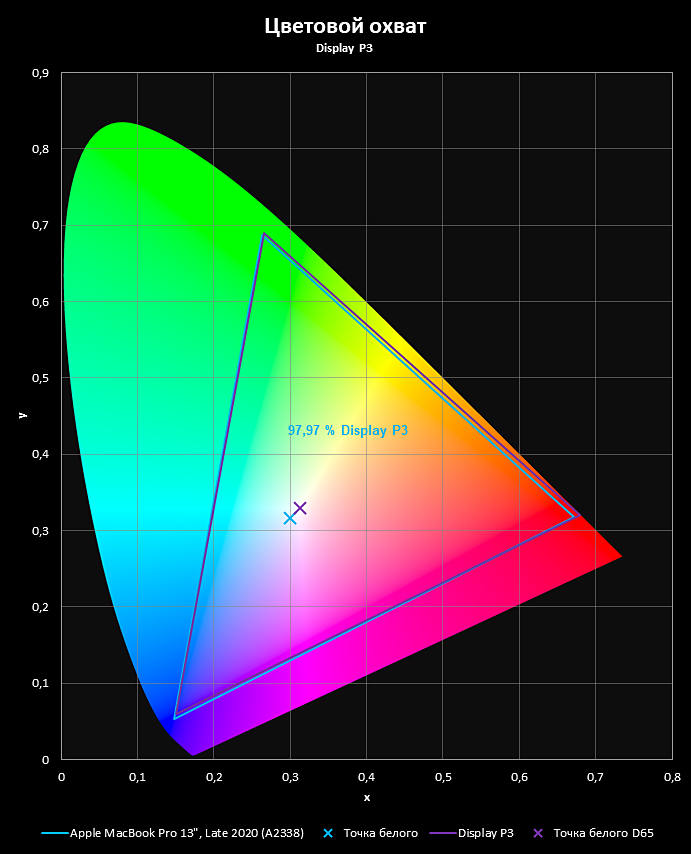

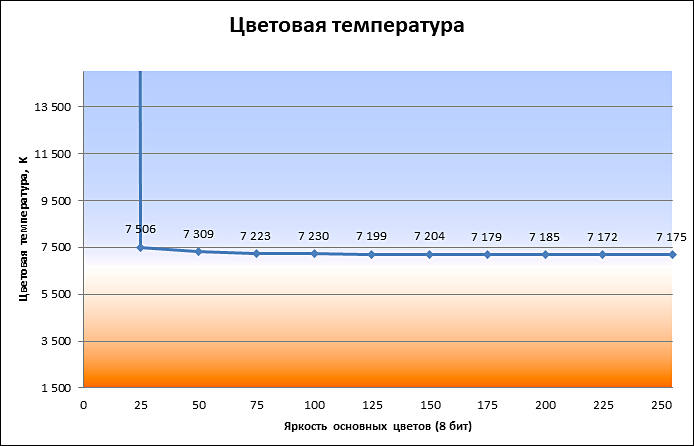

M1 notebooks got the first Apple screens that are bad for video editing

To show energy efficiency, Apple changed the backlight so that more of the LEDs' original blue peak comes through.

This shifted the white point, which is bad for editing.

-

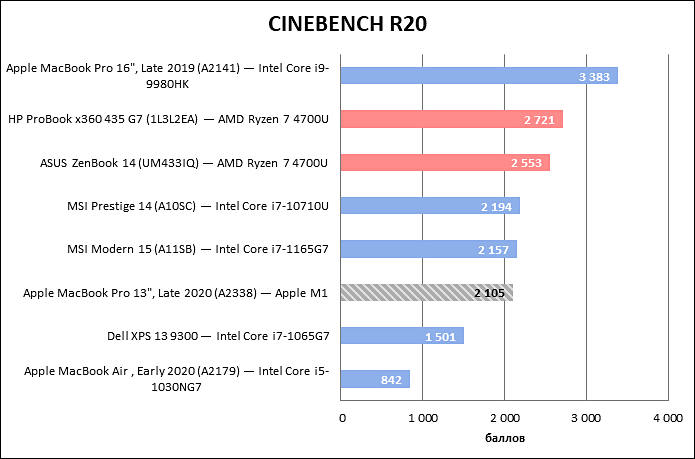

More benchmarks

As expected, we see the Puget benchmarks that Apple asked all reviewers to use.

-

Back to Apple policies, info from a medium-size YouTube channel

For all video applications, the reviewer must use only formats supported by the M1's built-in hardware accelerators. Tests that use other formats are allowed but must not take more than 15% of the time given to accelerated formats, and it is preferable not to make any charts of them or focus on them. Tests that use a supported format but with parameters that disable hardware acceleration are not allowed, and can be done only in additional videos or separate texts.

Apple also provides detailed instructions on how to test export time in FCP X (the test is designed so that FCP X can render before the export starts; also, all transitions and effects are designed not to overflow the M1 cache and not to use all CPU cores and the GPU at the same time).

Not following the Apple guidelines for video applications results in Apple no longer providing samples or any support, including the huge addition of views by hired people and bots for YouTube channels. This source said that the Apple guy who is his contact was very proud of the number of fake views that Apple can now provide to partners.

-

Tests for thoughts

-

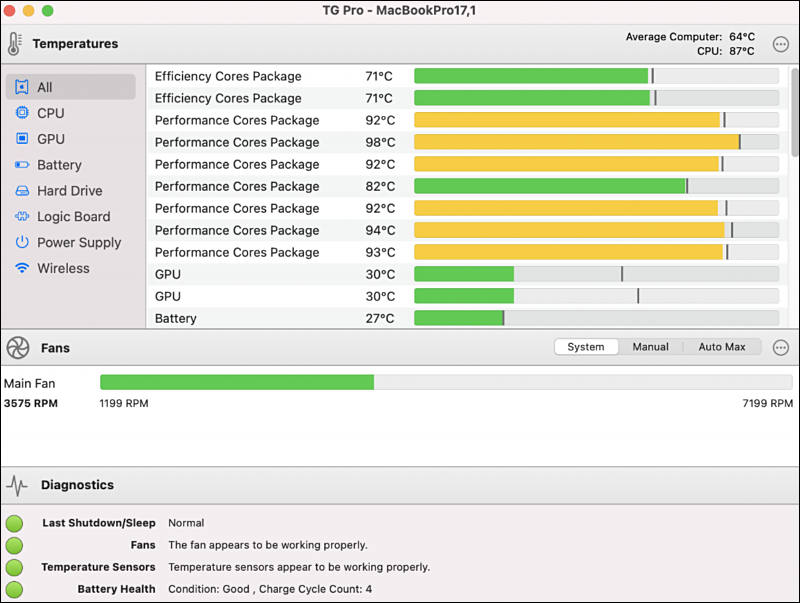

This is the first sane video about throttling, instead of the "it is a miracle" things made by non-tech guys.

You really see a task that loads the cores and GPU, and the cooling sucks.

You get a 100-degree CPU and a 7200 RPM fan sounding like a jet, and there is literally nothing you can do.

To make the cooling better, Apple needs 2 fans and increased thickness (to use thicker fans).

This is effectively how good Windows notebooks are designed.

-


I used this tool on several MacBook Pro 15.2 laptops from 2012-2015. All these laptops heat up to 100, then they spin the fan up to maximum and continue to work like that. I think all MacBooks since 2012 do the same thing. What's so bad about the M1 also reaching 100 and slowing down? Even in this mode, it just does some things faster than other Intel laptops.

-

100 degrees on a 14nm Intel chip and 100 degrees on a totally integrated 5nm M1 with DRAM dies on top are not even remotely comparable. Degradation of such a complex, dense 5nm chip will be 10-15x that of a simple Intel chip. If you look at the servers and GPUs used for everyday NN training, it is highly advisable not to go above 90 degrees; 95 degrees has been considered the top allowed temperature since the 7nm generation of chips.

That is the first point.

Her point was that people who got notebooks from Apple performed tests in such a way (according to Apple guidelines) as to give the impression that the M1 is totally different, always cool, with a fan that stays silent even during rendering. That is the case only if you test with specific settings.

-

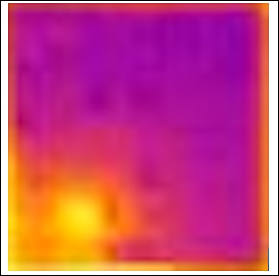

More on M1

Reusing the A12X packaging line that originated from an internal LSI design failure.

Bad thermal performance with cores concentrated in a small area; under stress, temperatures can reach a critical 105-107 degrees.

Complex interconnects

Lots of blocks

-

@VK The thermal envelope risks are the first points you make which aren't mitigable. So it's a dragster: it runs the quarter-mile race fast. OTOH this is the use case for the form factor these chips launched in. Color me worried if the future ARM iMac and Mac Pro run the same thermal risk. I think we'll see some advanced cooling on those, embedded in silicon. The technology exists, but maybe not for 3/5 nm processes.

-

Another interesting test

-

Why does this guy use an Air and complain that it overheats? The Air is not made for any heavy rendering, despite paid reviewers' claims.

-

I think the point is to do some testing after many have suggestively written that these computers would "handle a 4k timeline". On the other hand, I myself have evaluated similar possibilities in the past (despite the not excellent CPU in the MacBook Air), with the old models that could connect to an eGPU. Many of the slogans are refuted, or at least questioned, by this test.

-

Adobe has released an Arm version of Premiere Pro for macOS, allowing owners of new Apple Silicon M1 Macs to run the video editing software natively.
