It allows us to keep PV going, with more focus on AI, while remaining one of the few truly independent places.

-

@Kurth. I have a late 2011 Mini server. USB is 2.0, not 3.0. I upgraded the RAM to 16GB and its two hard drives to 500GB SSDs. Also, it cannot be upgraded past High Sierra without a hack. I have to make proxies for FCPX, but otherwise there are no performance issues. I'll be in the market for a replacement when funds are available. I'll wait on M1 machines until the smoke clears and we have some honest reviews.

-

DaVinci Resolve is getting updated with support for Arm-powered Macs, and a beta version will be ready for owners on day one. Blackmagic Design released a beta of Resolve version 17.1 today that includes support for Apple’s new M1 chip. That means when the first of these new Macs ship next week, buyers will be able to dive straight into a native version of a key filmmaking tool.

-

On Adobe’s Compatibility page, the company states definitively that while Apple has made it clear many apps will continue to run on Rosetta 2 emulation on the M1 chip – even saying that some apps may run better than they would on native Intel chips – Adobe Lightroom Classic is not considered compatible with this method.

Caution: Running Adobe apps under Rosetta 2 emulation mode on Apple devices with Apple Silicon M1 processors is not officially supported. Native support is planned.

“We’re excited to bring CC apps to Apple Silicon devices, including native support for Lightroom next month and Photoshop in early 2021,” an Adobe representative said. “Regarding Lightroom Classic, the team is working on a native version of Lightroom Classic for Apple Silicon, and it will be released next year. We’re also committed to continuing support for Intel-based Macs.”

Rumors say that Lightroom Classic may never make it.

Also, almost all developers on the Apple platform have moved to M1, including both Blackmagic and Adobe.

-

I'm very curious to see the later M model 16" MacBook Pros and iMacs. I don't care if you call it an iPhone, as long as it runs Final Cut Pro (and Resolve, apparently) faster, it is a machine of interest to me.

-

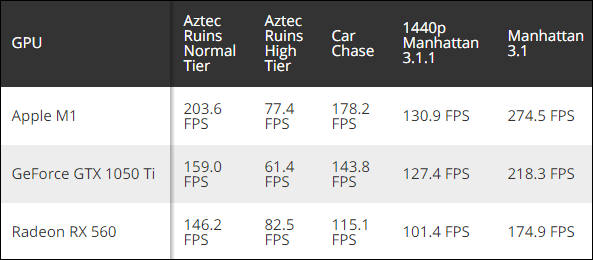

Apple PR continues to leak benchmarks.

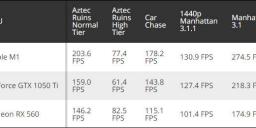

The GPU situation is not so good, so they preferred an odd benchmark made mostly for mobile-class chips (GFXBench 5.0). It is never used for serious desktop benchmarking.

-

Rumors say that Apple made unprecedented efforts focused on benchmarks.

Rosetta 2 even custom-recompiles and replaces parts of benchmarks to show good performance in emulation mode.

The same has been done with the top 50 most-used applications (in the parts that are used in benchmarks, not everywhere).

-

These benchmarks remind me of something similar:

"Volkswagen admitted that 11 million of its vehicles were equipped with software that was used to cheat on emissions tests."

-

Apple can't fail this time.

Buckle up for the first two waves of reviews; Apple will check each and every one of the major ones.

They made a nice CPU, but if the marketing department fails to show how superb it is, they could go the way of Windows RT.

-

Adobe is releasing Arm versions of Photoshop for Windows and macOS today. The beta releases will allow owners of a Surface Pro X or Apple’s new M1-powered MacBook Pro, MacBook Air, and Mac mini to run Photoshop natively on their devices. Currently, Photoshop runs emulated on Windows on ARM, or through Apple’s Rosetta translation on macOS.

Native versions of Photoshop for both Windows and macOS should greatly improve performance, just in time for Apple to release its first Arm-powered Macs. While performance might be improved, as the app is in beta there are a lot of tools missing. Features like content-aware fill, patch tool, healing brush, and many more are not available in the beta versions currently.

Actually, it is bad for Apple, as it also provides a way to compare the M1 to other ARM processors right as the reviews start.

-

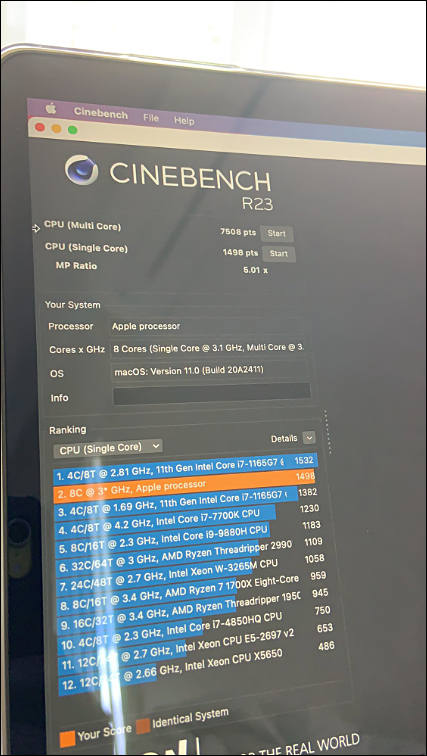

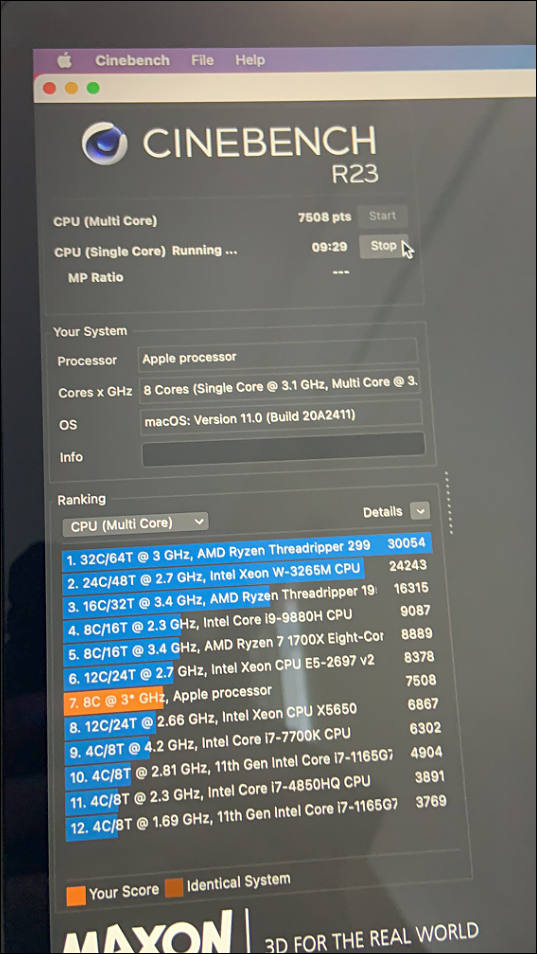

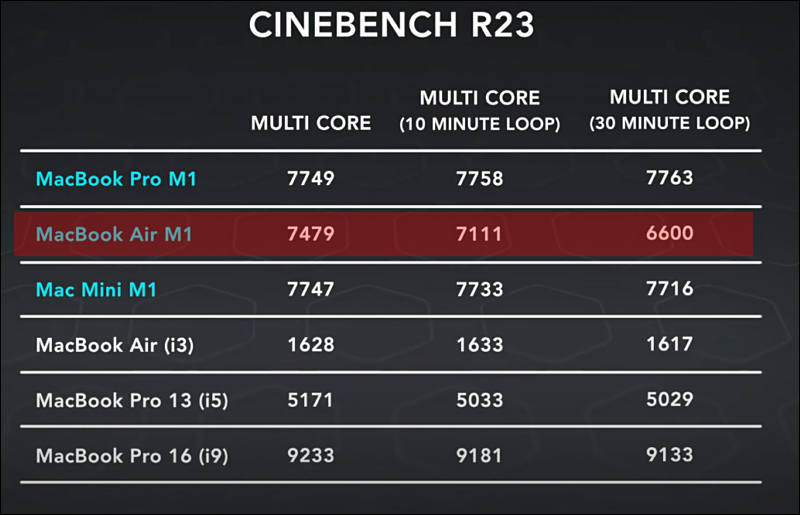

Cinebench R23, the new version where Apple wrote all the M1 routines

Note that these numbers are possible partly because a large Apple team worked with all major benchmark makers to write totally custom code for the M1.

-

MacBook Pro results

-

@Vitaliy_Kiselev When you write "worked with all major benchmark makers to write totally custom code for M1."

should I take that along the lines of #ifdef __arm_M1, where the code is optimized for the architecture (unified memory, etc), but otherwise functionally identical? Of course you can't answer this first hand. That's not something to blame them for, it's like seeing #ifdef __sparcv9 in old code. Until their architecture is well known to developers, it's understandable they'd be eager to put their best foot forward. Unless there's cheating.

-

Let me explain.

The average developer uses publicly available info and almost the same code across platforms.

With benchmarks, Apple uses ALL the private info they have about their CPUs. They hand-optimize every instruction and every loop; if some jump takes a hit due to a wrong branch prediction, they rewrite it so the branch predictor makes the proper guess. It is no longer plain C code, but a huge amount of low-level ARM assembler inserts that also use all the VLIW instructions in a way that is impossible for the compiler to reach by itself.

It is a very complex process: on one top benchmark like Cinebench you can have up to 100 developers working every day for 4-5 months on a very small amount of code.

By the way, AMD also did the same thing (on a much smaller scale) with their latest Ryzen processors for top benchmarks and around 30-40 top games. So it is not only the efficiency of CPUs that is rising; huge efforts are also being pushed into optimizing core code components, including popular libraries. Intel also has thousands of developers working on such things in tight tandem with the developers of benchmarks, games and scientific packages.

-

Some more on Apple's methods

The first footnote on their website (the Mini page): they measured performance per watt as peak (!) performance divided by average (!) power consumption.

This is a scam, guys.

-

@Vitaliy_Kiselev wrt "where on one top benchmark like Cinebench you can have up to 100 developers working each day for 4-5 months on very small amount of code." If that optimization effort is only towards the benchmark code path, then it can be easily considered cheating.

If OTOH it benefits the app as a whole, then I don't know (as long as they do it for Resolve too lol), I mean the users of Cinebench won't mind the performance increase. I get your point that those VLIW instructions wouldn't have been issued by the compiler, but that speaks more of the compiler than anything else. I wouldn't consider Intel's and AMD's involvement with optimizing external libraries and games as cheating.

-

It is on the benchmark code path.

As I said, all top hardware firms do this, and all big benchmark authors LIVE on this.

But they also have special schemes that make it almost impossible to catch them legally. As I understand it, the Apple people working on it are not legally employed by Apple or its direct contractors at the time. And code transitions and transfers, as well as discussions, happen much like the way CIA agents communicate on foreign soil.

So, even if there is a huge leak and scandal, the benchmark author will just declare that it is their new contractor team working on adding support for the new processor, and you can go prove otherwise.

DxO is well known for helping companies adjust and optimize for their photo and video benchmarks (meaning that they share intricate details on how to do this).

AnTuTu, for example, was hunted by Google at the request of Qualcomm and other US companies, as it is not controlled by a US or EU company.

-

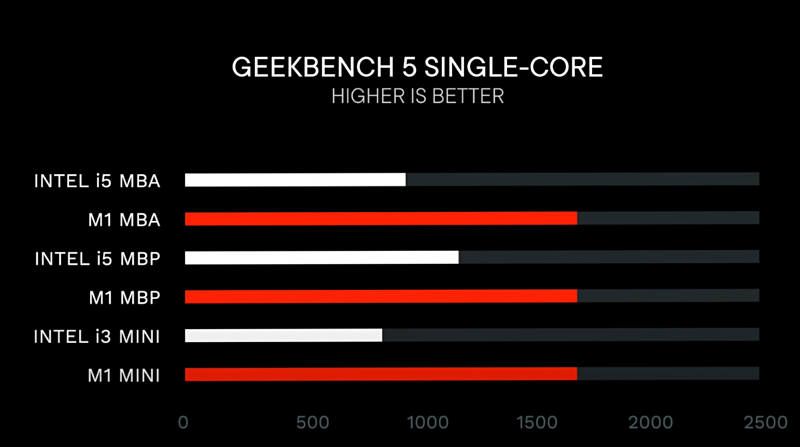

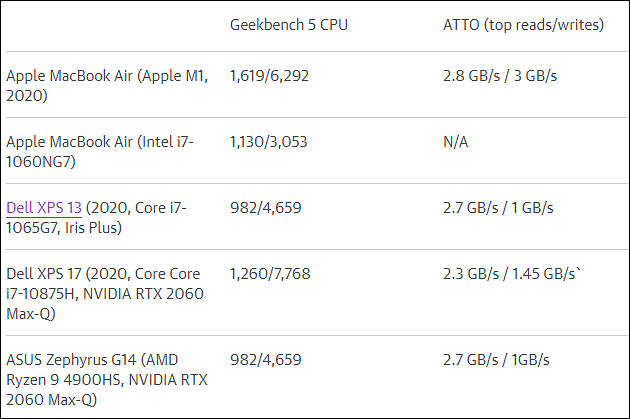

Geekbench is the benchmark Apple officially recommends to reviewers.

Ignore its results!

https://www.engadget.com/apple-macbook-air-m1-review-140031323.html

-

They are also now trying to praise SSD speeds like these (256 GB SSD):

- M1 MacBook Air: 2,190.1 MB/s write, 2,676.4 MB/s read (the SSD in the benchmark in the post above is bigger)

- 2019 MacBook Air: 1,007.1 MB/s write, 1,319.7 MB/s read

Note that these are useless SLC cache speeds, not a normal test, and they are nothing to write home about.

-

Reviews stink

It is like a bunch of idiots made them.

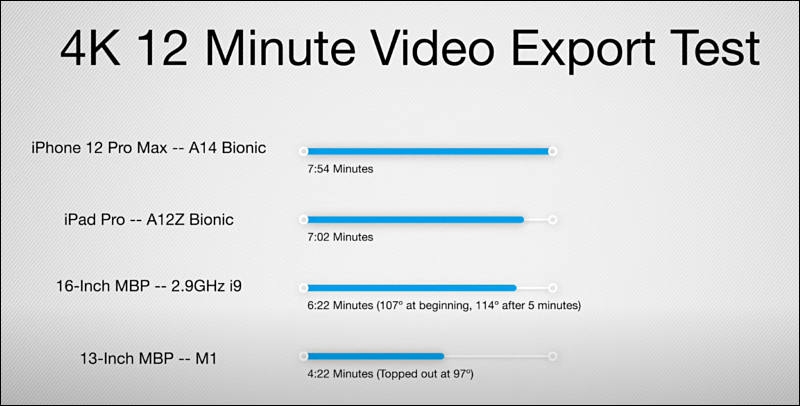

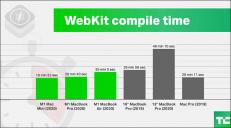

-

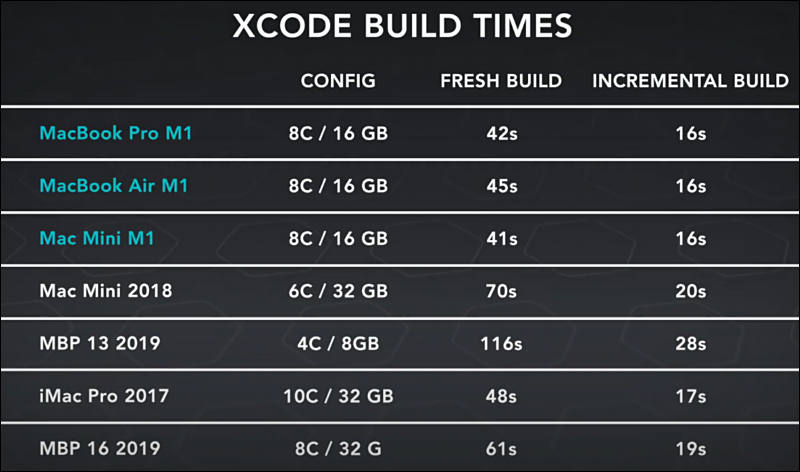

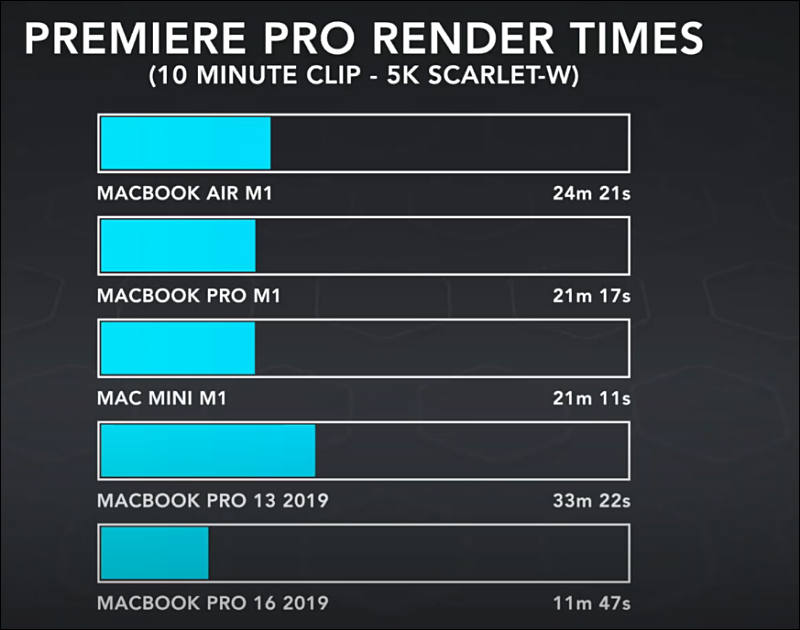

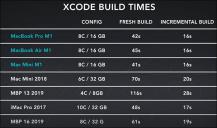

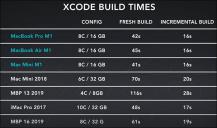

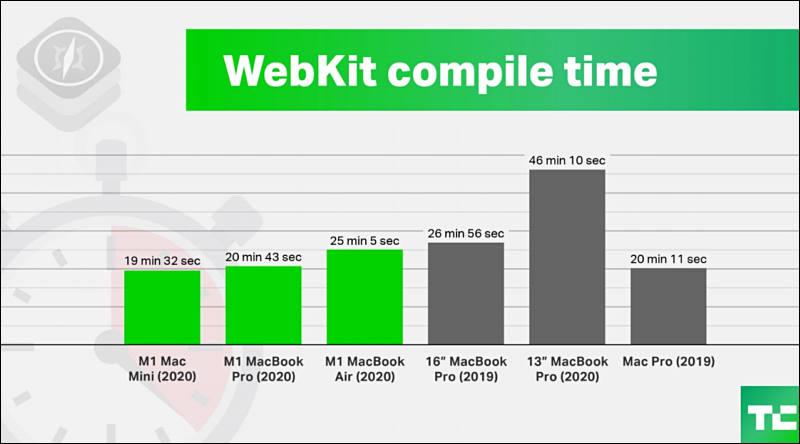

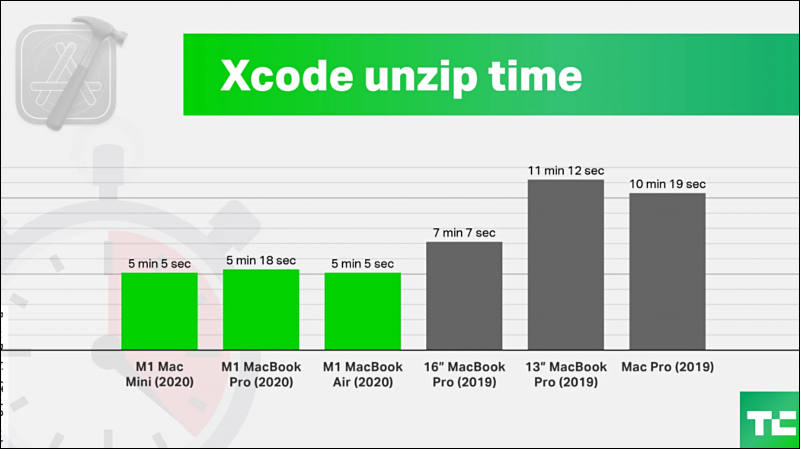

Throttling

Presenting a build with lots of small files as an independent CPU test is wrong; it is not one.

He should use an identical fast external Thunderbolt SSD.

Render time is nice, but it is also not all CPU cores: the media accelerator does the encoding and the GPU does everything else.

Premiere is not very good for now, since it does not use the GPU and the accelerator as needed.

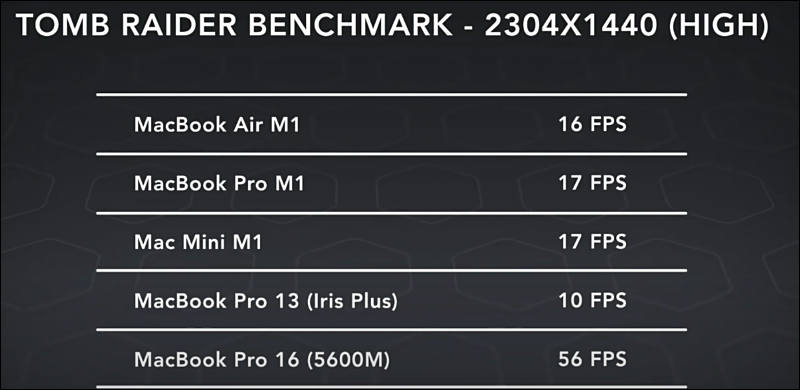

Games suck, as the GPU is small and mobile-class.

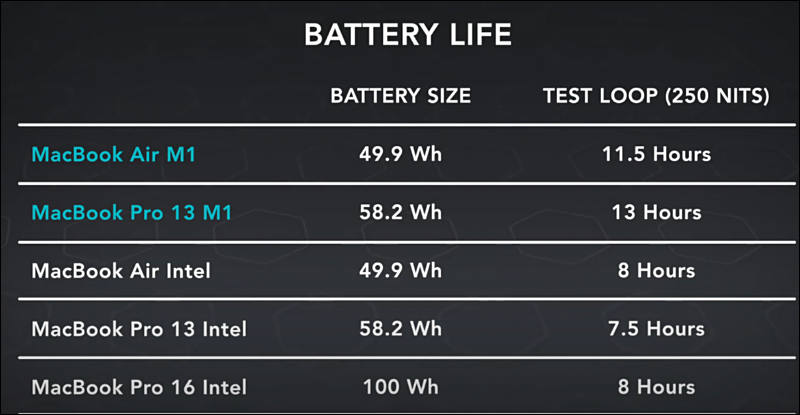

Battery life claims are false

So, it is a nice review coordinated with Apple, as it is not made for hardcore Apple fans.

-

The same wrong benchmarking approach.

-

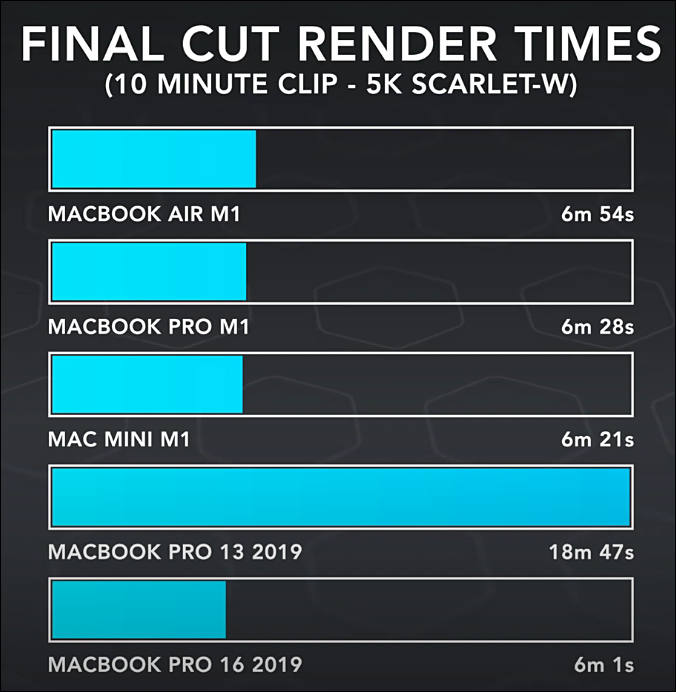

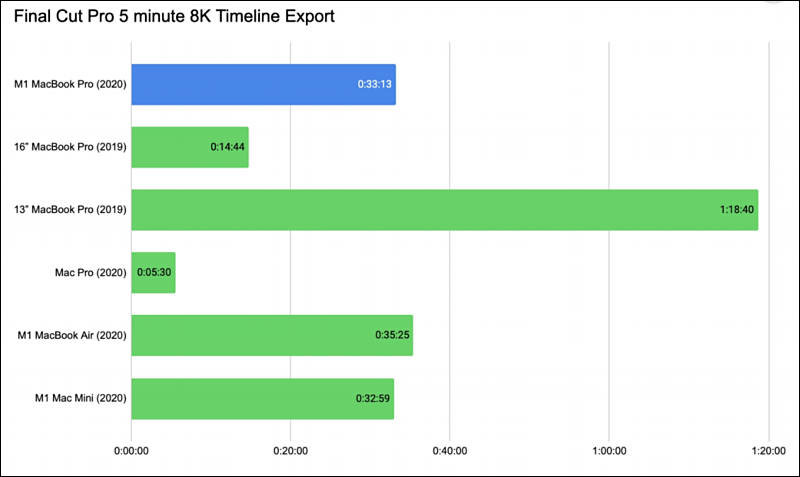

Everything is super inconsistent.

As I am being told, it is VERY important not to hit any CPU-intensive filter or operation if the M1 is to look good.

Hence almost all of the FCP tests Apple asked reviewers to run are simple transitions and small color adjustments, plus fast hardware-accelerated compression.

-

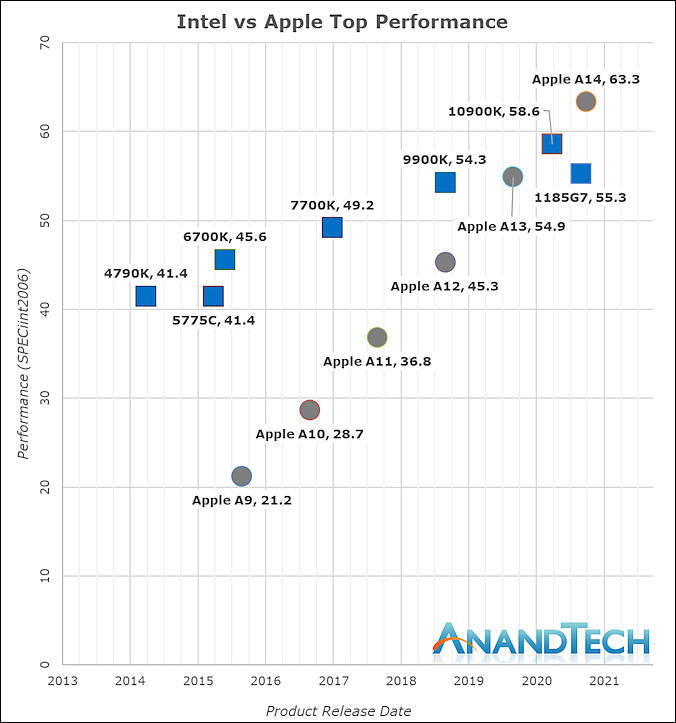

Some people love to show this

There is just one issue: during the period this chart covers, Intel's spending on desktop CPU cores was around 1/5 to 1/50 of what Apple spent on its main LSI.
