Pro: AVCHD Quantization process

-

Sorry, just tired, I fixed original post.

-

I had a look at the H.264 reference code (available at http://iphome.hhi.de/suehring/tml/) and if you look at the routines having to do with quantization (they are named Qxxxxxx in the header and c code sections) you can see structural similarities with the GH2 tables. The numbers inside the structures are different however. The reference code is not optimized (it's very, very slow), so there is no pre-calculation of table values, as I suspect Panasonic is doing. Another place to look is the x264 codec (available at http://www.videolan.org/developers/x264.html) which is optimized. I haven't gone through that yet, but I am somewhat hopeful that there will be some hints in there for us.

As for the numbers you mentioned relating to GOP length: they actually seem more related to frame rate, corresponding to the whole number of GOPs closest to 1 second. I vaguely recall the same parameters in the GH1 codec. I don't remember what the value for 1080/50 was, whether it was 40, 50, or 52 (because the GOP was 13 for the GH1). I had surmised that it had something to do with when frames are flushed to flash memory, but I'm not sure.

Chris -

Yes, it is the number of frames, made up of whole GOPs, that is closest to one second.
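To make the rule concrete, here is a minimal sketch that reproduces the figures quoted in this thread (24, 60 and 48). The function name is made up for illustration, and it assumes the "rate" is the nominal per-second count for each mode (24, 60, 50); nothing here is taken from the firmware itself.

```python
def frames_nearest_one_second(rate, gop):
    """Return the frame count, in whole GOPs, closest to one second.

    rate: nominal pictures per second for the mode (assumed: 24, 60, 50)
    gop:  GOP length in frames
    """
    # Round to the nearest whole number of GOPs, but never fewer than one.
    gops = max(1, round(rate / gop))
    return gops * gop


for label, rate, gop in [("1080p24", 24, 12),
                         ("1080i60", 60, 15),
                         ("1080i50", 50, 12)]:
    print(label, frames_nearest_one_second(rate, gop))
```

With a GOP of 13 and a rate of 50, the same rule gives 52, which is one of the candidate GH1 values recalled above.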

-

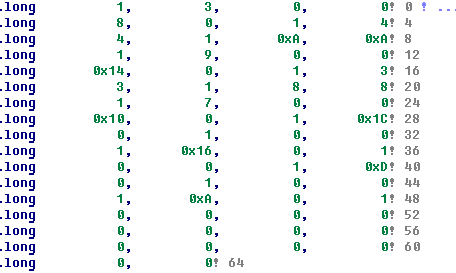

About the 48-element tables.

My current understanding is that each one is three 4x4 tables stored in zigzag order.

What do you think? -

On the 48 entry tables - that's the conclusion I came to - otherwise the sequence of quantization values doesn't make sense. That might imply that quantization (and therefore other coding functions as well) happen in a 4:4:4 color sample environment and that color sub-sampling happens later. Actually, that makes sense because if you want a codec that can handle other color sample schemes you would only want one coding engine common to all color sub-sample schemes and do the sub-sampling at the end; it results in much simpler code. Either that or there are simply 4x as many blocks for the Y component as the others. I doubt that, though, because that would make motion vector calculations more complicated. There might be something interesting to explore here. To some extent I would assume that Panasonic has a common codec that is used across several products; otherwise code maintenance would be a nightmare.
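If the three-tables reading is right, a 48-entry table could be unpacked like this. This is only a sketch: it assumes the firmware uses the standard H.264 4x4 frame zigzag scan and stores the three tables back to back, and the helper names and demo data are placeholders, not real GH2 values.

```python
# Standard H.264 4x4 zigzag (frame) scan: scan position -> raster index.
ZIGZAG_4X4 = [0, 1, 4, 8, 5, 2, 3, 6, 9, 12, 13, 10, 7, 11, 14, 15]


def unzigzag_4x4(values):
    """Rebuild a 4x4 matrix (list of rows) from 16 zigzag-ordered values."""
    if len(values) != 16:
        raise ValueError("expected 16 values")
    block = [0] * 16
    for scan_pos, raster_pos in enumerate(ZIGZAG_4X4):
        block[raster_pos] = values[scan_pos]
    return [block[row * 4:(row + 1) * 4] for row in range(4)]


def split_48(table):
    """Split a 48-entry table into three 4x4 matrices (assumed layout)."""
    if len(table) != 48:
        raise ValueError("expected 48 values")
    return [unzigzag_4x4(table[i:i + 16]) for i in range(0, 48, 16)]


# Placeholder data: 0..47 just makes the scan order visible.
for matrix in split_48(list(range(48))):
    for row in matrix:
        print(row)
    print()
```

If the real tables follow this layout, the small values should end up clustered in the top-left corner of each matrix, which is a quick sanity check on the hypothesis.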

I have no idea what the 66 entry table might be. I'll look at the reference codec to try to locate any 66 entry tables.

Chris -

-

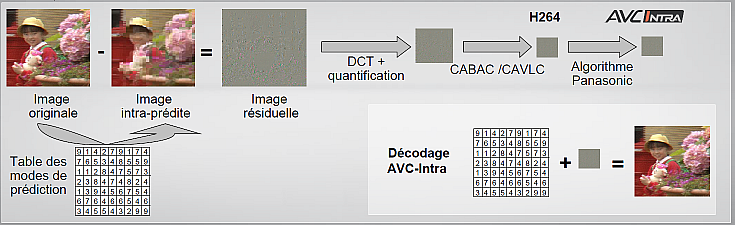

Other interesting stuff from http://www.ficam.fr/upload/documents/AVCIntra.pdf

This could also be a table consisting of prediction mode codes.

-

Vitaliy,

"For 1080p24 we have 24 (GOP=12), 60 for 1080i60 (GOP=15), 48 for 1080i50 (GOP=12)."

Is there a patch in PTool for these values (I couldn't find it)? It may be necessary to change these if the GOP length is changed in order to maintain stability, or for some other reason.

Chris -

@cbrandin

About tables - you resolve them by looking at references. The way the compiler works, it is in fact extremely rare for such tables to have a size of 52.

The table I referenced is accessed as i*24+offsets.

>Is there a patch in PTool for these values (I couldn't find it)? It may be necessary to change these if the GOP length is changed in order to maintain stability, or for some other reason.

Maybe, I just prefer doing things step by step.

We already have too many tester patches. -

-

I meant that there are few references because offsets are used - that can make it more difficult to identify where one table starts and ends. It seems that the tables you are looking at are actually 24 tables each. Or, are they i tables of 24 (or, 6 if long) entries? Anyway, it means that you have to examine the code to figure the tables out because there aren't references that separate them. Not a big deal, just more work. It can get complicated if the tables were created from arrays of structures of mixed data types.
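For illustration, the i*24+offsets access pattern can be modelled as fixed-size records in a flat blob. This is a rough sketch only: the 24-byte stride comes from the post above, while the little-endian byte order, the function names and the demo data are assumptions.

```python
import struct

STRIDE = 24  # bytes per record, taken from the i*24+offsets access pattern


def read_record(blob, i):
    """Return the i-th 24-byte record from a flat table blob."""
    start = i * STRIDE
    if i < 0 or start + STRIDE > len(blob):
        raise IndexError("record index out of range")
    return blob[start:start + STRIDE]


def as_bytes(record):
    """Interpret a record as 24 single-byte entries."""
    return list(record)


def as_longs(record):
    """Interpret a record as 6 32-bit entries (little-endian is assumed)."""
    return list(struct.unpack("<6I", record))


# Placeholder blob holding two records; real data would come from a dump.
blob = bytes(range(48))
print(as_bytes(read_record(blob, 1)))
print(as_longs(read_record(blob, 1)))
```

Printing the same record both ways makes it easier to judge which interpretation gives plausible-looking values.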

-

I think I found something interesting about quantization. I've been looking at streams with StreamEye to see how macroblocks are being quantized. At the beginning of a stream (during the "blip"), or if low bitrates are used, the quantization values for macroblocks range from 26-51. That's to be expected as the higher the quantization value the lower the detail. The interesting thing is what happens once high bitrate streams settle past the "blip" stage. With Panasonic factory firmware, and 24H cinema mode, all macroblocks are coded with a quantization value of 20. With higher bitrates (I tried up to 42000000) macroblocks are still coded with a macroblock quantization value of 20. I've never seen a value below 20 - it's as if that is a hard coded limit. It seems to me that with higher bitrates we should see lower quantization values. Wherever the extra bitrate bandwidth is going it's not going into higher resolution macroblocks.

Chris -

Look here:

http://onlinehelp.avs4you.com/Appendix/AVSCodecSettings/H264AdvancedSettings/ratecontrol.aspx

It seems that quantization limits are programmable with some codecs. Adding a parameter to adjust the minimum settings seems appropriate. Right now it appears that the min value is set to 20 and the max value is set to 51 (which goes to the end of the tables) in the firmware. -
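As a sketch of what such a limit does: in H.264 the quantizer step size roughly doubles for every increase of 6 in QP, so a floor of 20 caps how fine the quantization can get no matter how much bitrate is available. The step values below are the nominal ones commonly tabulated for H.264; the clamp function and its defaults (20 and 51, from the observations above) are illustrative, not firmware code.

```python
# Nominal H.264 quantizer step sizes for QP 0..5; they double every 6 QP.
QSTEP_BASE = [0.625, 0.6875, 0.8125, 0.875, 1.0, 1.125]


def qstep(qp):
    """Nominal quantizer step size for a QP in the range 0..51."""
    if not 0 <= qp <= 51:
        raise ValueError("QP must be in 0..51")
    return QSTEP_BASE[qp % 6] * (2 ** (qp // 6))


def clamp_qp(qp, qp_min=20, qp_max=51):
    """Clamp a requested QP to the encoder's limits (assumed 20..51)."""
    return max(qp_min, min(qp_max, qp))


# Rate control may ask for a finer quantizer, but the floor wins.
for requested in (14, 20, 26, 51):
    used = clamp_qp(requested)
    print(requested, used, qstep(used))
```

Under these numbers, lowering the floor from 20 to 14 would halve the step size, which is where extra bitrate would be expected to show up as detail.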

On that same page you'll see explanations for "keyframe boost" and "b frame reduction". I think this is similar to the "top" and "bottom" parameters in PTool in that they affect the relative sizes of I frames and B frames (and possibly P frames, but I don't know). That would mean you have to be careful about how you set them, maintaining reasonable ratios. Maybe they should be scaled in proportion to the bitrate without changing the ratio between them.
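Scaling both settings by the same factor could look like the following sketch. The function name and the numbers are hypothetical; it only shows that multiplying both values by new bitrate / old bitrate keeps their ratio unchanged.

```python
def scale_limits(top, bottom, old_bitrate, new_bitrate):
    """Scale the "top" and "bottom" values in proportion to the bitrate.

    Both values are multiplied by the same factor, so top/bottom stays
    the same. All inputs here are illustrative, not real PTool defaults.
    """
    factor = new_bitrate / old_bitrate
    return top * factor, bottom * factor


# Hypothetical values: going from 24 Mbit/s to 42 Mbit/s.
new_top, new_bottom = scale_limits(400, 100, 24_000_000, 42_000_000)
print(new_top, new_bottom, new_top / new_bottom)
```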

Chris -

I know the bare minimum about this stuff but something I read kind of spurred a thought.

So it looks like the codec quantization goes from top to bottom of the frame and the first second or so of the stream seems to be lower quality/bitrate, so could that mean that the panny version of the codec is written to go through one cycle of GOP to build up enough data to estimate proper encoding? -

No, quantization happens at the macroblock level (there are about 8,000 of them in a 1080p frame: 120 x 68 macroblocks of 16x16 pixels, or about 4,000 per field for interlaced modes). A lot has to happen when a stream starts, so I think there is a throttle at the beginning. With the GH13 we found that it was important to start slow, otherwise the camera crashed instantly.
