It allows us to keep PV going, with more focus on AI, while remaining one of the few truly independent places.

-

In digitization of both audio and video, the two main quality issues are aliasing and quantization. With audio, steep high-cut (anti-aliasing) filters allow alias-free sample rates as low as 44 kHz, and 24-bit ADCs have imperceptible quantization noise. Video digitization is nowhere near as clean: you can readily spot aliasing patterns in shots of standard resolution charts, and banding of smooth gradients is a frequent pitfall.
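To put rough numbers on the two audio claims above, here is a minimal Python sketch. `alias_frequency` shows where a tone above the Nyquist limit (half the sample rate) would land if the anti-aliasing filter did not remove it first, and `quantization_snr_db` uses the textbook ideal-quantizer formula (about 6.02 dB per bit plus 1.76 dB, for a full-scale sine). Both function names are illustrative, not from any library.

```python
import math


def alias_frequency(f_signal: float, f_sample: float) -> float:
    """Apparent frequency of a pure tone after sampling at f_sample (no filtering).

    Sampling cannot distinguish f from f modulo fs, nor from its mirror image
    about the Nyquist frequency fs/2, so the tone "folds" into 0..fs/2.
    """
    f = f_signal % f_sample
    return min(f, f_sample - f)


def quantization_snr_db(bits: int) -> float:
    """Ideal SNR (dB) of an N-bit quantizer driven by a full-scale sine wave."""
    # 20*log10(2**N) gives ~6.02 dB per bit; 10*log10(1.5) is the ~1.76 dB sine term
    return 20 * bits * math.log10(2) + 10 * math.log10(1.5)


if __name__ == "__main__":
    # A 30 kHz tone sampled at 44.1 kHz without a filter shows up at 14.1 kHz
    print(alias_frequency(30_000, 44_100))
    print(f"16-bit: {quantization_snr_db(16):.2f} dB")
    print(f"24-bit: {quantization_snr_db(24):.2f} dB")
```

The 24-bit figure (about 146 dB) is a theoretical ceiling; in practice the analog noise of a real converter sits well above it, so quantization is rarely the limiting factor.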

-

@alcomposer "But 32 video channels currently can only be edited together, and viewed one at a time, unlike audio."

So when I do a compositing job with over 50 layers, are you suggesting that I really can't see all my layers and I'm only imagining them? Your knowledge of video systems needs a little refresh!

-

It all went... well... far.

-

@caveport "So when I do a compositing job with over 50 layers, are you suggesting that I really can't see all my layers and I'm only imagining them? Your knowledge of video systems needs a little refresh!"

Gosh I didn't know you always do compositing on EVERY multicam shoot you do! Unlike audio, which is ALWAYS composited together when using multiple microphones.

Normally I only open up Fusion for VFX work. I didn't think that was the discussion.

Also, I don't ALWAYS use video in compositing; sometimes it's mattes or deep pixel layers. So no, all those channels are not always going to be separate video media assets, as they are in audio land.

Also, most compositors are currently not optimised for GPU acceleration (CUDA can also be slow here; simply using GLSL could solve the speed issues), which is a huge problem. Even simple blend operations are still carried out on the CPU, something a shader could easily do at 32-bit precision in a fraction of the time.
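As an illustration of why blend operations suit the GPU, here is a minimal Python sketch of the Porter-Duff "over" operator on premultiplied-alpha RGBA pixels. Every output pixel depends only on the input pixels at the same position, so a fragment shader can run one invocation per pixel in parallel. The function names are hypothetical, and Python floats are 64-bit, whereas a shader would typically work in 32-bit floats.

```python
from typing import List, Sequence, Tuple

# Premultiplied-alpha pixel: (r * a, g * a, b * a, a), components in [0, 1]
RGBA = Tuple[float, float, float, float]


def over(src: RGBA, dst: RGBA) -> RGBA:
    """Porter-Duff 'over' for premultiplied RGBA: out = src + dst * (1 - src_alpha)."""
    k = 1.0 - src[3]
    r, g, b, a = (s + d * k for s, d in zip(src, dst))
    return (r, g, b, a)


def composite(layers: Sequence[Sequence[RGBA]]) -> List[RGBA]:
    """Composite same-sized layers (flat lists of pixels), bottom layer first.

    Each output pixel uses only the pixels at the same index, which is the
    per-pixel independence a GPU shader exploits.
    """
    result = list(layers[0])
    for layer in layers[1:]:
        result = [over(s, d) for s, d in zip(layer, result)]
    return result


if __name__ == "__main__":
    blue = (0.0, 0.0, 1.0, 1.0)          # opaque blue background pixel
    half_red = (0.5, 0.0, 0.0, 0.5)      # 50% transparent red, premultiplied
    print(composite([[blue], [half_red]]))  # [(0.5, 0.0, 0.5, 1.0)]
```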

Even video decoding could be shifted from the CPU to the GPU, something that needs to happen ASAP. There are a few GPU-accelerated codecs (such as HAP), but they are not lossless enough for production work, only for realtime work (look into TouchDesigner or Vuo for realtime video mixing and effects processing).
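To make the HAP trade-off concrete: the basic HAP variant stores frames as DXT1 (also called BC1) compressed textures, which GPUs can sample directly without a CPU decode step. DXT1 packs every 4x4 pixel block into a fixed 8 bytes (two reference colours plus a 2-bit index per pixel), so it is fast but lossy. The Python sketch below only does the size arithmetic for one UHD frame; it ignores HAP's optional Snappy compression layer and its other variants (HAP Alpha, HAP Q), and the function names are hypothetical.

```python
def rgba8_frame_bytes(width: int, height: int) -> int:
    """Uncompressed 8-bit RGBA: 4 bytes per pixel."""
    return width * height * 4


def dxt1_frame_bytes(width: int, height: int) -> int:
    """DXT1/BC1: each 4x4 block (padded at the edges) is stored in exactly 8 bytes."""
    blocks_x = (width + 3) // 4
    blocks_y = (height + 3) // 4
    return blocks_x * blocks_y * 8


if __name__ == "__main__":
    w, h = 3840, 2160  # UHD frame, assumed for illustration
    raw = rgba8_frame_bytes(w, h)
    dxt = dxt1_frame_bytes(w, h)
    print(raw, dxt, raw // dxt)  # 33177600 4147200 8
```

The fixed 4 bits per pixel is what makes the format cheap to stream to the GPU, and also why it cannot be lossless: any 4x4 block with more than four distinct colours must be approximated.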

Can we please not get personal? I would love to have a great discussion about this without being questioned; I have never questioned your knowledge. I'm sure we are all professionals here, with great bodies of work. It's not helpful to have a discussion in which we just throw around random "your knowledge needs a refresh" comments.

This whole discussion has got out of hand. I've greenscreened 360 VR content with up to 100 layers at 4k and was able to play it back in realtime... so no need for a refresh.
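For a sense of scale on the 100-layer figure, here is a back-of-the-envelope Python sketch of the memory traffic needed just to read every layer once per output frame. The frame size (3840x2160), frame rate (30 fps) and pixel formats are assumptions, since the post does not give them. Real compositors reduce this with caching, proxies, compressed GPU textures and skipping fully covered regions, so treat the result as an upper bound on naive reads, not a measurement.

```python
def layer_read_bytes_per_second(layers: int, width: int, height: int,
                                bytes_per_pixel: int, fps: int) -> int:
    """Bytes/s to read every layer's full frame once per output frame (no caching)."""
    return layers * width * height * bytes_per_pixel * fps


if __name__ == "__main__":
    for label, bpp in (("8-bit RGBA", 4), ("32-bit float RGBA", 16)):
        rate = layer_read_bytes_per_second(100, 3840, 2160, bpp, 30)
        print(f"{label}: {rate / 1e9:.1f} GB/s")
```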

I don't understand the strawmanning, considering my last comment on this super offtopic debate:

Actually, I have realised through this discussion that there is a huge problem with my logic: we need a way to quantify the quality of both audio and video capture systems. If audio is "much simpler", then we have to quantify at what "quality" it is simpler. Let's say we capture an SD video image of an orchestra and a super high-quality audio recording: are they of similar quality? No. Why? Because the audio recording will sound much more real than the video recording. I would suggest that 4k 60p is the equivalent of a good audio recording in making the viewer feel like they were there "in the room". So with that logic, and that definition of similar quality, then yep, video wins the complexity race.
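One way to start quantifying "similar quality" is to compare raw, uncompressed data rates. The Python sketch below assumes a 24-bit/96 kHz stereo recording for the "super high" audio and 3840x2160, 60p, 10-bit 4:2:2 video (20 bits per pixel on average) for the 4k 60p case; both formats are illustrative choices, not figures from the post.

```python
def audio_bits_per_second(sample_rate: int, bit_depth: int, channels: int) -> int:
    """Raw PCM audio data rate."""
    return sample_rate * bit_depth * channels


def video_bits_per_second(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Raw (uncompressed) video data rate."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    audio = audio_bits_per_second(96_000, 24, 2)          # 4.608 Mbit/s
    # 10-bit 4:2:2: 10 bits luma + (10 + 10) / 2 bits chroma = 20 bits per pixel
    video = video_bits_per_second(3840, 2160, 20, 60)    # ~9.95 Gbit/s
    print(audio, video, video // audio)                   # 4608000 9953280000 2160
```

On these assumptions the video stream carries about 2,160 times as many raw bits per second as the audio, which is one concrete way of expressing the complexity gap.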

All this because I would like to use video like audio; I didn't think this was a debatable topic. Video is getting easier to work with: try doing 100 4k layers in realtime on a 10-year-old PC.

As was mentioned before, video is transitioning to a more robust format, similar to the evolution audio went through (16-bit -> 24-bit).
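Assuming the video counterpart of audio's 16-bit to 24-bit move is the shift from 8-bit to 10-bit code values, a small Python sketch shows why that matters for the gradient banding mentioned earlier in the thread: quantize a full black-to-white ramp across a 3840-pixel-wide frame and count the distinct levels. The helper name is hypothetical.

```python
from typing import List


def quantize_ramp(width_px: int, bits: int) -> List[int]:
    """Quantize a linear 0..1 ramp spanning width_px pixels to integer code values."""
    max_code = 2 ** bits - 1
    return [round(x / (width_px - 1) * max_code) for x in range(width_px)]


if __name__ == "__main__":
    width = 3840
    for bits in (8, 10):
        levels = len(set(quantize_ramp(width, bits)))
        # Each flat band is on average width / levels pixels wide
        print(f"{bits}-bit: {levels} levels, bands ~{width / levels:.2f} px wide")
```

Real footage includes noise (which acts as dither) and grading that can either hide or exaggerate these steps, so band width alone does not predict visible banding.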

Also remember that 32-bit audio is just around the corner, with AD systems that use multiple AD stages to make distortion and peaking a thing of the past. Maybe audio is the medium that is playing catch-up! ;-)
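The idea of multiple AD stages can be illustrated with a deliberately simplified, hypothetical Python sketch: two converters record the same signal, one with extra gain for quiet detail and one without for loud peaks, and each output sample is taken from the high-gain path unless that path is near clipping. The gain ratio, switching threshold and function name are assumptions; actual 32-bit float recorders use their manufacturers' own merging algorithms, which this hard switch does not reproduce.

```python
from typing import List, Sequence

GAIN_RATIO = 16.0       # hypothetical: high-gain path is 16x (~24 dB) hotter than the low-gain path
CLIP_THRESHOLD = 0.95   # hypothetical: distrust high-gain samples above 95% of full scale


def merge_dual_adc(high: Sequence[float], low: Sequence[float],
                   gain: float = GAIN_RATIO, threshold: float = CLIP_THRESHOLD) -> List[float]:
    """Merge simultaneous samples (normalised to [-1, 1]) from two ADC paths.

    Output is in the low-gain path's scale; a floating-point container keeps the
    small rescaled values precise without a fixed integer range.
    """
    merged = []
    for hi_sample, lo_sample in zip(high, low):
        if abs(hi_sample) < threshold:
            merged.append(hi_sample / gain)  # quiet: cleaner high-gain sample, scaled back down
        else:
            merged.append(lo_sample)         # loud: high-gain path is (nearly) clipped, use low-gain
    return merged


if __name__ == "__main__":
    # Signal values 1/32 and 0.5: the high-gain path clips the second one at 1.0
    print(merge_dual_adc([0.5, 1.0], [0.03125, 0.5]))  # [0.03125, 0.5]
```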

-

@alcomposer "Can we please not get personal?" and "Gosh I didn't know you always do compositing on EVERY multicam shoot you do!"

I would really like to see any system that can composite 100 streams of 4k in real time! Current editing systems are struggling with 3+ layers in real time.

I made no mention of multicam or Fusion. I think this is the end of a non-productive discussion. The difference in our knowledge of the underlying technology is making effective communication impossible.

Also "Because the audio recording will sound much more real than the video recording." I laughed so hard! What a fabulous dry-humour joke! I love it!

-

@caveport I think this thread should be brought back to @Vitaliy_kiselev's topic.

Personally, I think it is quite interesting. All computer storage systems have a bit depth, even UTF-8. So this discussion is intrinsic to all computer storage systems, of which digital video is just one.
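To illustrate the UTF-8 point: UTF-8 is built from 8-bit code units, and characters outside ASCII take two to four of them. A quick Python check (the sample characters are arbitrary):

```python
samples = {
    "A": "ASCII letter",
    "\u00e9": "e with acute accent",
    "\u20ac": "euro sign",
    "\U0001F600": "emoji outside the Basic Multilingual Plane",
}

for char, description in samples.items():
    encoded = char.encode("utf-8")
    # Every encoded unit is one 8-bit byte; longer characters just use more of them
    print(f"U+{ord(char):04X} ({description}): {len(encoded)} byte(s) = {8 * len(encoded)} bits")
```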

-

A calibrated studio SDR monitor will emit a maximum of 100 nits. Where a normal SDR studio monitor may reproduce black between 1 and 2 nits, the Canon DP-V2410 HDR monitor is capable of reproducing black at 0.025 nits.

From a Canon-sponsored HDR diatribe

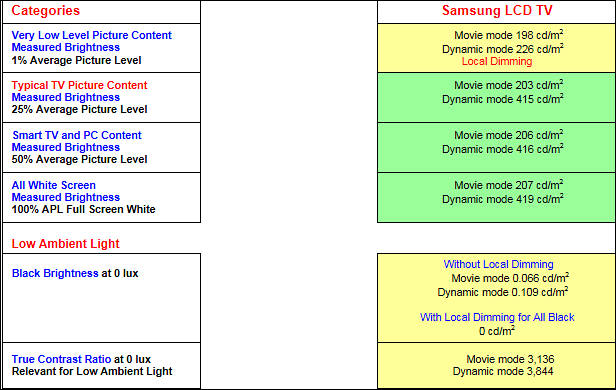

This is a pure lie. Here is a not-too-good on-camera IPS monitor (VA is much better):

- White field: 122 cd/m²

- Dark field: 0.183 cd/m²

The lie is around 10x, even for IPS. Desktop IPS monitors are better, and VA panels are much better still.
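The "around 10x" figure can be checked from the two measurements with a few lines of Python: compute the panel's contrast ratio, scale its black level to the 100-nit SDR white in the Canon quote (assuming the contrast ratio stays constant when brightness is reduced, which holds only approximately for LCDs), and compare with the quoted 1 to 2 nits.

```python
WHITE_NITS = 122.0   # measured white field (cd/m², i.e. nits)
BLACK_NITS = 0.183   # measured dark field

contrast = WHITE_NITS / BLACK_NITS   # ~667:1
black_at_100 = 100.0 / contrast      # black level if white is set to 100 nits, ~0.15 nits

print(f"contrast ~{contrast:.0f}:1, black at 100 nits ~{black_at_100:.3f} nits")
for claimed in (1.0, 2.0):
    print(f"claimed {claimed} nits is ~{claimed / black_at_100:.1f}x the measured value")
```

On these assumptions the quoted 1 to 2 nits overstates the black level of even this modest IPS panel by roughly 7x to 13x, consistent with the estimate above.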

A typical Samsung TV (VA panel):

The most important part of the HDR lie (especially for LCD-based displays) is to sidestep human vision science, and especially how adaptation works.

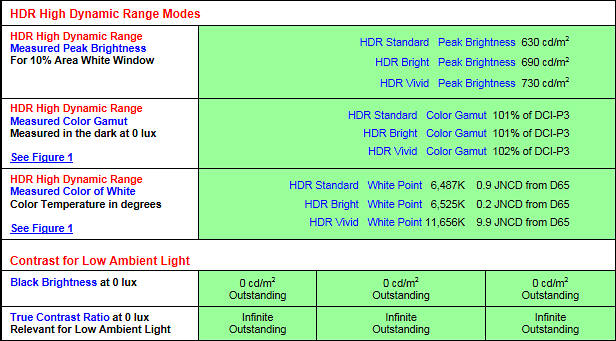

OLED measurements (standard 2016 LG TV)

Yet plasma-like behavior.

